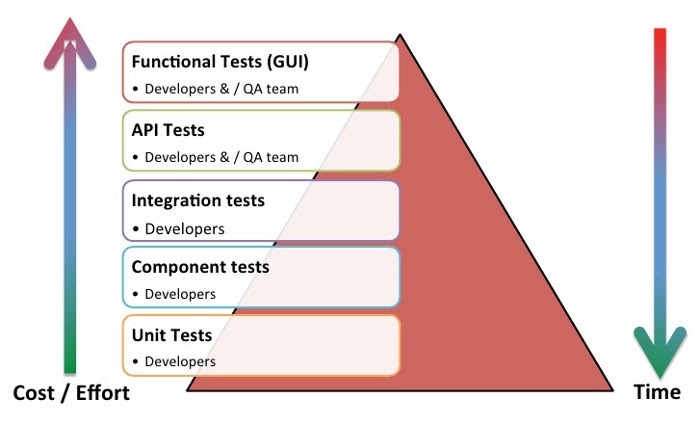

Our Fitradar back-end solution is created based on Clean Architecture as described in this article and explained in this conference talk. One part of the back-end solution is incoming request validation, and in order to implement it as a part of the MediatR pipeline we are using FluentValidation library that replaces built-in ASP.NET Core validation system. At the beginning of the project it seemed that all we have to do is just to create a custom validator derived from AbstractValidator and perform the validation of an Application Model in a single class. And that is how we started to implement the validation. And since the library has quite enhanced capabilities that allow implementing complex validation rules for a while we didn’t notice any problems. Until the moment when we realized that not all validation rules are simple incoming Data Model validation. Some of the rules expressed our business logic rules and restrictions. And so we started to think about splitting the rules across the layers and following are multi step validation solution we came up with:

- Application level validation. At this level incoming JSON data model fields validation is performed. The field types are validated automatically by ASP.NET core built-in Model binding but the other validation rules are executed by FluentValidation including:

- required field validation

- text field length validation

- numeric field range validation

- Domain level validation. At the domain level we apply Domain Driven Design approach and therefore instead of FluentValidation we decided to use Specification pattern. We liked the idea that we can move the validation logic outside the Domain Entity to the separate Validator. By doing that we were able to bind validation logic to incoming commands instead of Domain Entity. For example we have an entity SportEvent and several commands that call methods inside that entity like Update Sport Event and Cancel Sport Event. Now because the different commands have different business requirements for the same Entity property it would be hard to implement them with FluentValidation. For example property BookedSeats in case of Update Sport Event command should be 0, but in case of Cancel Sport Event command it is allowed that someone has already booked a particular Sport Event. And bellow is how the Cancel Sport Event validator looks like:

public sealed class EventCancellationValidator : EntityValidatorBase

{

public EventCancellationValidator()

{

AddValidation("CancellationTimeValidation",

new ValidationRule<SportEventInstance>(

new Specification<SportEventInstance>(sportEvent =>

sportEvent.StartTime > DateTime.UtcNow),

ValidationErrorCodes.CANCELLATION_TOO_LATE, "It is not possible to cancel the

Sport Event after it has started", "SportEventStartTime"));

}

}- Database level validation. For accessing database we are using Entity Framework Core and to make sure the database models are in tune with database constraints we are using built in property attributes and classes that implement IEntityTypeConfiguration interface that allows to define database constraints like Primary Key, Foreign Key and Unique Key.

P.S. Please follow our Facebook page for more!